A data warehouse is a structure that brings together several very large data collections of different origins and is used to support decisions taken at the tactical and strategic levels of an organization. Typically, it exists separately from the operational databases. Before being transferred from the data sources, the information goes through ETL (Extract, Transform, Load) processes, in which it is filtered and processed to comply with the conventions established when the data warehouse was designed. This technology is used in conjunction with data mining: the volume of information stored in data warehouses is used to generate knowledge, which is why the field that deals with such issues is called business intelligence.

A data warehouse:

- ensures access to the organization’s data in the shortest possible time; to achieve this goal, the data are intentionally denormalized, so data redundancy is allowed (as opposed to normalized operational databases);

- allows the information it contains to be used without further processing, because that processing was already performed when the data warehouse was built (the ETL mechanism: data can be used only after their quality has been ensured); in this respect, it is also particularly important that the data be consistent;

- uses historical data to identify trends that can then be used to make forecasts; therefore, every piece of information has an associated time attribute;

- uses information organized around the subjects of the business (customers, suppliers, products) rather than around applications, as is the case for operational databases;

- performs update operations extremely rarely, and these consist exclusively of adding information to the data warehouse, never of modifying what already exists;

- provides read-only data access.
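Several of these properties (a time attribute on every record, append-only updates, read-only access, denormalized rows) can be illustrated with a minimal Python sketch. The names `SalesFact`, `AppendOnlyStore` and `monthly_totals` are hypothetical and chosen only for this illustration; a real warehouse would enforce these rules in the database engine rather than in application code.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)  # frozen: a stored record cannot be modified afterwards
class SalesFact:
    day: date        # the time attribute that every record carries
    customer: str    # denormalized: the name is repeated instead of referenced by key
    product: str
    amount: float


class AppendOnlyStore:
    """Hypothetical in-memory stand-in for a warehouse table."""

    def __init__(self):
        self._rows = []

    def append(self, fact):
        # The only write operation offered: adding new information.
        self._rows.append(fact)

    def rows(self):
        # Readers receive an immutable tuple, so access is read-only.
        return tuple(self._rows)


def monthly_totals(store):
    """Summarize historical data per (year, month), the basis for spotting trends."""
    totals = {}
    for fact in store.rows():
        key = (fact.day.year, fact.day.month)
        totals[key] = totals.get(key, 0.0) + fact.amount
    return totals
```

Because the store exposes no update or delete operation, the history it accumulates stays intact, which is what makes trend analysis over time possible.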

Data Warehouse Architecture

Structurally, a data warehouse can be described in terms of its components, of the levels on which it is organized, and of its functional architecture.

In terms of components, we distinguish data sources, the data repository and access tools (analysis interfaces).

Among the data sources, an important contribution comes from the organization’s operational databases, whose data are given a corresponding timestamp, along with various archived information and external data related to the economic field in which the organization operates or to its customers and business partners.

As data sources are, in most cases, heterogeneous in nature, their data must undergo transformation processes before actually being transferred to the data warehouse, where they are used either directly or in the form of aggregated information.

The ETL process therefore involves the following steps:

- extracting data from the different sources and transferring them using a common representation mechanism;

- transforming the data: eliminating incorrect or incomplete data, as well as data not relevant to the organization;

- loading the data into the data warehouse, where they will be used for aggregation operations; as a rule, aggregation follows criteria related to how the information will most likely be used in the context of the data warehouse.
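The three steps above can be sketched in Python using only the standard library. Everything in this example is an assumption made for illustration: the two source extracts (`CRM_ROWS`, `SHOP_ROWS`), the `sales` table and the validity rules are hypothetical, and an in-memory SQLite database stands in for the warehouse.

```python
import sqlite3

# Hypothetical source extracts with different layouts (heterogeneous sources).
CRM_ROWS = [
    {"cust": "Acme", "total": "120.50", "date": "2023-01-05"},
    {"cust": "", "total": "80.00", "date": "2023-01-07"},   # incomplete: no customer
]
SHOP_ROWS = [
    ("Beta", 75.0, "2023-01-09"),
    ("Acme", -10.0, "2023-01-11"),                           # incorrect: negative amount
]


def extract():
    """Extract: bring every source into one common representation."""
    for row in CRM_ROWS:
        yield {"customer": row["cust"], "amount": float(row["total"]), "day": row["date"]}
    for customer, amount, day in SHOP_ROWS:
        yield {"customer": customer, "amount": amount, "day": day}


def transform(records):
    """Transform: drop incomplete or incorrect records (assumed rules)."""
    return [r for r in records if r["customer"] and r["amount"] > 0]


def load(conn, records):
    """Load: append the cleaned records to the warehouse table."""
    conn.execute("CREATE TABLE IF NOT EXISTS sales (customer TEXT, amount REAL, day TEXT)")
    conn.executemany("INSERT INTO sales VALUES (:customer, :amount, :day)", records)
    conn.commit()


def totals_by_customer(conn):
    """An aggregation of the kind the loaded data is meant to serve."""
    query = "SELECT customer, SUM(amount) FROM sales GROUP BY customer ORDER BY customer"
    return conn.execute(query).fetchall()


def run_etl():
    conn = sqlite3.connect(":memory:")
    load(conn, transform(extract()))
    return conn
```

Calling `totals_by_customer(run_etl())` aggregates only the records that survived the transformation step; the two invalid rows never reach the warehouse.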

Given the above, an important issue is the mechanism of data integration, which must ensure a consistent and coherent view of the organization over the time horizon considered. Attention must therefore be paid to:

- a unique coding mechanism (a system of codes for each field, the same for all records that have the respective meaning, regardless of their origin);

- the same system of units of measurement;

- a unique way of organizing the data physically, following the standards specified in the data warehouse design;

- conventions for naming attributes.
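As a rough illustration of these integration rules, the sketch below maps records from different sources onto one code system, one unit of measurement and one set of attribute names. All mappings (`COUNTRY_CODES`, `TO_KILOGRAMS`, `ATTRIBUTE_NAMES`) and the `integrate` function are hypothetical examples, not conventions taken from any particular system.

```python
# Hypothetical conventions fixed at warehouse design time.
COUNTRY_CODES = {"romania": "RO", "ro": "RO", "rou": "RO",
                 "germany": "DE", "de": "DE", "deu": "DE"}
TO_KILOGRAMS = {"kg": 1.0, "g": 0.001, "lb": 0.45359237}
ATTRIBUTE_NAMES = {"country": "country_code", "ctry": "country_code",
                   "weight": "weight_kg", "wt": "weight_kg"}


def integrate(record, weight_unit):
    """Return the record expressed in the warehouse's codes, units and names."""
    out = {}
    for name, value in record.items():
        target = ATTRIBUTE_NAMES[name]          # one naming convention
        if target == "country_code":
            # One code system, regardless of how the source spelled it.
            out[target] = COUNTRY_CODES[str(value).strip().lower()]
        elif target == "weight_kg":
            # One unit of measurement (kilograms).
            out[target] = round(float(value) * TO_KILOGRAMS[weight_unit], 3)
    return out
```

For instance, `integrate({"ctry": "Romania", "wt": 2500}, "g")` and `integrate({"country": "ROU", "weight": 2.5}, "kg")` describe the same fact in two source formats and yield the same integrated record.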

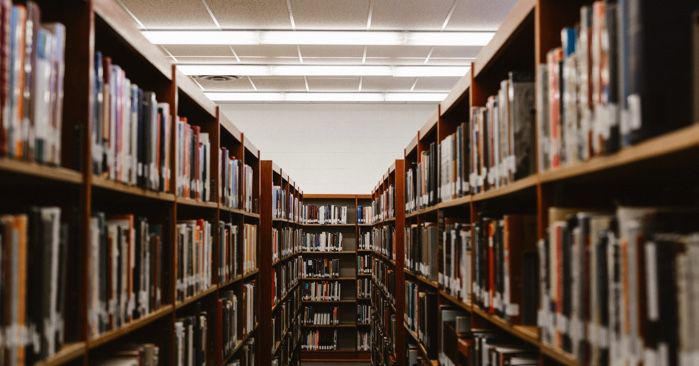

Once stored in the data warehouse (both data taken from the different data sources and aggregated information), the information can be exploited in several ways, depending on the purpose for which it is intended. One option is to use data marts, each made up of a certain segment of the data warehouse, whose contents are processed through access tools (analysis interfaces), that is, software products designed for their analysis. Technologies such as data mining or OLAP are frequently used.
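To make the idea of a data mart and of OLAP-style analysis more concrete, here is a minimal sketch that again uses an in-memory SQLite database as a stand-in for the warehouse. The `sales` table, the `retail_mart` view and the sample rows are assumptions made for the example; dedicated OLAP tools offer far richer operations than the two queries shown.

```python
import sqlite3


def build_warehouse():
    """Create a tiny hypothetical warehouse table and a data mart on top of it."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (day TEXT, department TEXT, product TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?, ?)", [
        ("2023-01-05", "retail", "bolt", 10.0),
        ("2023-01-20", "retail", "nut", 5.0),
        ("2023-02-01", "retail", "bolt", 7.5),
        ("2023-01-15", "wholesale", "bolt", 100.0),
    ])
    # The data mart: only the segment of the warehouse that one department needs.
    conn.execute(
        "CREATE VIEW retail_mart AS "
        "SELECT day, product, amount FROM sales WHERE department = 'retail'"
    )
    return conn


def roll_up_by_month(conn):
    """Roll-up: aggregate the mart at month level."""
    return conn.execute(
        "SELECT substr(day, 1, 7) AS month, SUM(amount) "
        "FROM retail_mart GROUP BY month ORDER BY month"
    ).fetchall()


def drill_down_by_product(conn):
    """Drill-down: the same totals, split further by product."""
    return conn.execute(
        "SELECT substr(day, 1, 7) AS month, product, SUM(amount) "
        "FROM retail_mart GROUP BY month, product ORDER BY month, product"
    ).fetchall()
```

The analysis functions query only `retail_mart`, so the wholesale rows stay invisible to them, which mirrors how a data mart exposes one segment of the warehouse to a given group of users.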